Oculus Quest (2): streaming to TV, smartphone, tablet and PC

Oculus Quest (2) can stream VR content to TVs, smartphones, tablets and computers. This lets friends and family watch your virtual reality experience.

Officially, there are three ways to stream VR content: to smartphones and tablets, to devices that support Chromecast, and to PCs and macOS devices via a web browser.

Streaming to smartphone or tablet

This streaming option can be started from the Oculus Quest or from the Oculus app.

How to start streaming from the Oculus Quest to a smartphone or tablet:

- Make sure that the smartphone or tablet is connected to the same Wi-Fi network as your Oculus Quest.

- Open the Oculus app on your smartphone or tablet.

- Press the Oculus button on the right controller.

- Select "Share" (arrow symbol) in the main VR menu, and then select "Stream".

- Select "Oculus app" and confirm with "Next".

- In the Oculus app, confirm that you want to begin streaming by selecting "Start a program".

- A red dot in VR indicates that streaming is active.

How to stop the stream:

- Press the Oculus button on the right controller.

- Select "Share" (arrow symbol) in the main VR menu.

- Select "Stream" and then "Stop streaming".

Streaming to a Chromecast

Google Chromecast is an accessory that connects to the TV via HDMI and streams content to it over Wi-Fi. Many modern televisions have Chromecast technology built in. Otherwise, you can buy the accessory separately.

This streaming option can be started from the Oculus Quest or from the Oculus app.

How to start streaming from the Oculus Quest to a TV:

- Make sure your Chromecast device or Chromecast-enabled TV is connected to the same Wi-Fi network as your Oculus Quest.

- Press the Oculus button on the right controller.

- Select "Share" (arrow symbol) in the main VR menu, and then select "Stream".

- Your Chromecast device or Chromecast-enabled TV should appear in the list. Select it, and streaming should begin.

- A red dot in VR indicates that streaming is active.

How to stop the stream:

- Press the Oculus button on the right controller.

- Select "Share" (arrow symbol) in the main VR menu.

- Select "Stream" and then "Stop streaming".

If you want to stop streaming from the Oculus app instead, select "Stop broadcasting" in the app.

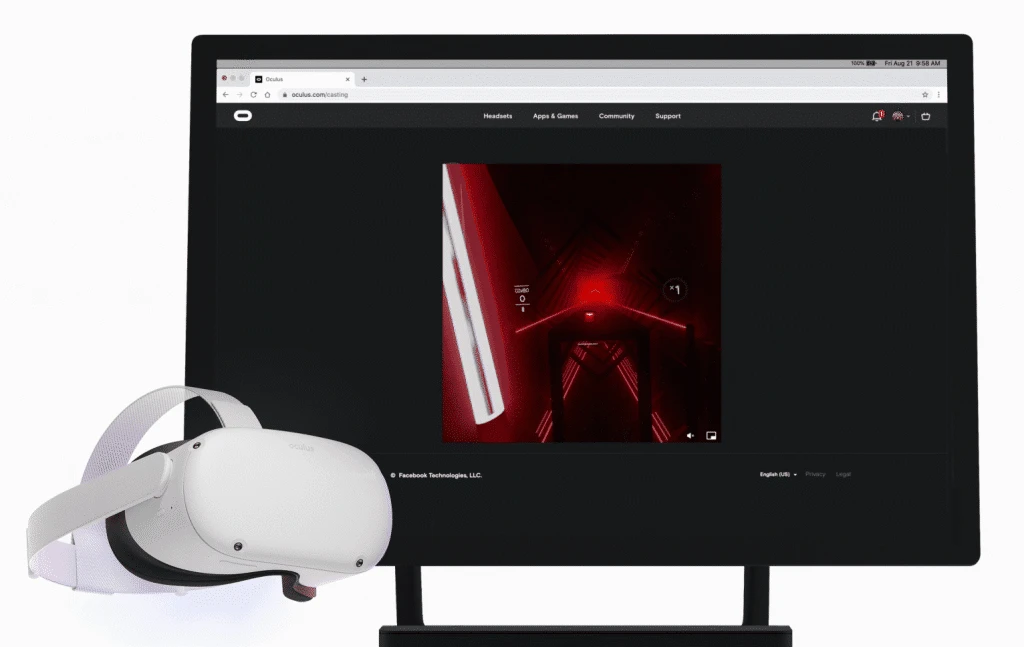

Streaming in the browser

This type of streaming uses Google Chrome or Microsoft Edge. Other browsers are currently not supported. Since Google Chrome also runs on macOS, you can stream to your iMac or MacBook as well.

How to start streaming from the Oculus Quest to a browser:

- Make sure that the target device (Windows PC or macOS device) is connected to the same Wi-Fi network as your Oculus Quest.

- On the target device, open the website oculus.com/casting in Google Chrome or Microsoft Edge and sign in to your Oculus or Facebook account, if you haven't already.

- Press the Oculus button on the right controller.

- Select "Share" (arrow symbol) in the main VR menu, and then select "Stream".

- Select "Computer" and confirm by pressing "Next" and "finish".

- A red dot in VR indicates that streaming is active.

- If you want, enable full screen and audio output and adjust the volume.

How to stop the stream:

- Press the Oculus button on the right controller.

- Select "Share" (arrow symbol) in the main VR menu.

- Select "Stream" and then "Stop streaming".

Note: If the red indicator dot bothers you during streaming, you can easily disable it. Go to "Device" in the settings. At the bottom, you can turn off "Display to video" (the video recording indicator).

The advantages and disadvantages of streaming

Chromecast is currently the best option for streaming: if you broadcast VR content to a TV, you get a large picture and usually a relatively low delay of less than a second. This is sufficient for most VR applications, with the exception of fast-paced rhythm games such as Beat Saber and Pistol Whip.

A possible disadvantage of this streaming option is that you need to buy a Chromecast if your TV does not support the technology out of the box. The latest model, Chromecast with Google TV, costs around $70.

It also gives you access to Netflix and other streaming services on your TV, along with their apps, all controlled with the supplied remote. It requires an HDMI input on the TV.

If you do not have a TV that is compatible with Chromecast and you don't want to spend money on a Chromecast, streaming to your smartphone or tablet is the simplest solution. The biggest disadvantages of this type of streaming are the small display and, usually, higher latency and greater susceptibility to interference than with Chromecast.

Streaming through the browser is the second alternative to Chromecast. It offers fairly low latency and good image quality, but cannot (yet) display the VR image in widescreen format.